📊 Data discrepancies occur when different sources report conflicting measurements for what should be the same metric, undermining research reliability and stakeholder trust

🔎 Common causes include human errors (misconfiguration, interpretation differences), tool inconsistencies, methodological variations, and poor integration between systems

⛛ Use triangulation, cross-validation, and regular data audits to identify discrepancies before they impact critical business decisions

🛡️ Prevent discrepancies by standardizing data collection, documenting protocols thoroughly, and consolidating research tools into unified platforms

🤏 Small discrepancies under 5% may be acceptable, but larger inconsistencies require investigation to protect research credibility

You’ve just finished analyzing results from your latest usability study when something catches your eye.

The task completion rate in your analytics dashboard shows 78%, but your session recordings suggest only 62% of users actually completed the task successfully. Your heart sinks as you realize you’re looking at a data discrepancy.

This scenario plays out more often than most UX researchers would like to admit. Data discrepancies can undermine the credibility of your research, lead to misguided product decisions, and erode stakeholder trust in your insights.

But here’s the good news: understanding what causes these inconsistencies and how to prevent them can transform the reliability of your UX research.

What is data discrepancy in UX research?

Data discrepancy refers to inconsistencies or contradictions between different data sources, measurements, or research findings within your UX research process.

It happens when multiple data points that should theoretically align tell different stories instead. These inconsistencies can appear in various forms:

👉 Conflicting metrics between different analytics tools

👉 Mismatches between quantitative and qualitative findings

👉 Variations in how the same metric is calculated across different studies

Sometimes the discrepancy is subtle, like slightly different conversion rates reported by two tools. Other times it’s glaring, like completely contradictory findings about user behavior.

The challenge is that data discrepancies don’t always announce themselves clearly.

They lurk in the details, waiting to surface at the worst possible moment, like when you’re presenting findings to stakeholders or making critical product decisions based on your research.

Example of UX research data discrepancy

Let’s look at a concrete example that illustrates how data discrepancies manifest in real research scenarios.

The checkout flow scenario

Imagine you’re conducting research on an e-commerce checkout flow. Your heat map tool shows that 85% of users click the “Proceed to Payment” button.

Meanwhile, your analytics platform reports that only 68% of users reach the payment page.

Your session recordings reveal something else entirely: many users click the button multiple times because of a loading delay, artificially inflating the click count.
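To see how repeat clicks distort the picture, here is a minimal Python sketch that compares raw click volume with the share of unique users who clicked. The click log, field names, and user count are hypothetical and only stand in for whatever your heat map tool exports.

```python
# Hypothetical click log: (user_id, element) pairs. Repeated entries come from
# users clicking again while the page is still loading.
clicks = [
    ("u1", "proceed_to_payment"),
    ("u1", "proceed_to_payment"),  # repeat click during the loading delay
    ("u2", "proceed_to_payment"),
    ("u3", "proceed_to_payment"),
    ("u3", "proceed_to_payment"),
    ("u3", "proceed_to_payment"),
]
total_users = 5  # assumed number of users who saw the checkout page


def click_rates(clicks, total_users):
    """Return (raw clicks per user, share of unique users who clicked)."""
    raw = len(clicks) / total_users
    unique = len({user for user, _ in clicks}) / total_users
    return raw, unique


raw_rate, unique_rate = click_rates(clicks, total_users)
print(f"raw clicks per user: {raw_rate:.0%}, unique clickers: {unique_rate:.0%}")
```

In this made-up log, six clicks from five users look like heavy engagement, yet only three of the five users clicked at all. Counting unique users rather than clicks is usually the fairer comparison against page-reach numbers.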

The navigation study scenario

Here’s another common scenario. You run a tree testing study to evaluate your information architecture, and 72% of participants successfully find the product category they’re looking for.

But when you conduct follow-up interviews, participants express confusion about the navigation structure. The quantitative data suggests success, while the qualitative feedback indicates frustration.

These aren’t edge cases. They’re everyday realities in UX research that can lead you down the wrong path if you don’t recognize and address them.

What issues does data discrepancy cause?

Data discrepancies create a ripple effect that touches every aspect of your research process and its outcomes. The consequences extend far beyond simple measurement errors.

🚨 Impact on the quality of UX research insights

Reliability crumbles

When your data doesn’t align, the reliability of your insights crumbles. You might conclude that a new feature is performing well based on one metric, only to discover through another data source that users are actually struggling with it.

This inconsistency makes it nearly impossible to draw confident conclusions during UX research synthesis.

Time gets wasted

Data discrepancies also waste precious time. Your team spends hours trying to reconcile conflicting numbers instead of analyzing insights and making recommendations.

The research process that should take two weeks stretches into a month as you investigate why different tools are reporting different results.

Trust erodes

Trust in your research erodes when stakeholders receive conflicting reports. If your last presentation showed a 75% task success rate but today’s deck shows 68% for the same period, stakeholders start questioning all your findings.

Once that trust is damaged, even accurate insights face skepticism.

Progress tracking becomes impossible

The problem compounds when you’re trying to track changes over time.

If your baseline measurement is inconsistent with your follow-up study, you can’t accurately determine whether your design changes improved the experience.

You might celebrate a 10% improvement that’s actually just measurement variation.

🚨 Impact on business decisions

The real danger of data discrepancies lies in the decisions they influence. Product teams rely on your research to prioritize features, allocate resources, and set strategic direction.

When that research is built on inconsistent data, the entire decision-making foundation becomes unstable.

Costly rollback scenarios

Consider a scenario where discrepant data leads your team to believe a redesigned navigation is performing better than it actually is.

You roll out the change to all users, only to see support tickets increase and actual conversion rates drop.

The cost isn’t just in rolling back the change. It’s in lost revenue, damaged user trust, and lower team morale, all critical factors when implementing UX improvements.

Misallocated resources

Data discrepancies can also lead to misallocated resources.

If your metrics suggest users are abandoning at the payment page when they’re actually struggling earlier in the funnel, you’ll invest time and money optimizing the wrong part of the experience.

Meanwhile, the real problem remains unsolved.

Budget debates

Budget discussions become contentious when stakeholders question the validity of your research.

If finance sees one completion rate while product sees another, negotiations about resource allocation turn into debates about whose numbers are correct rather than productive discussions about improving the user experience.

Root causes of research data discrepancy

Understanding why data discrepancies occur is the first step toward preventing them. Let’s explore the main culprits behind these inconsistencies.

⚠️ Human errors

People make mistakes, and in UX research, these mistakes can manifest as data discrepancies.

Configuration mistakes

A researcher might misconfigure a tracking event, accidentally excluding mobile users from one measurement while including them in another.

Someone on your team might manually enter data from user interviews into a spreadsheet, making transcription errors that skew your findings.

Interpretation differences

Interpretation errors are equally problematic. Two researchers might code the same user feedback differently during qualitative coding for UX research, leading to conflicting conclusions about user sentiment.

One researcher defines task completion as reaching the confirmation page, while another counts it only when users receive the confirmation email.

These subtle differences in definition create significant discrepancies in reported success rates.
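A small sketch shows how much the definition alone can move the number. The records and field names below are hypothetical. The point is only that the same sessions yield two different completion rates depending on which success criterion you pick.

```python
# Hypothetical per-participant records; field names are illustrative only.
sessions = [
    {"user": "p1", "reached_confirmation": True, "email_received": True},
    {"user": "p2", "reached_confirmation": True, "email_received": False},
    {"user": "p3", "reached_confirmation": True, "email_received": True},
    {"user": "p4", "reached_confirmation": False, "email_received": False},
]


def completion_rate(sessions, success_field):
    """Share of sessions where the chosen success criterion is met."""
    return sum(1 for s in sessions if s[success_field]) / len(sessions)


by_page = completion_rate(sessions, "reached_confirmation")  # 3 of 4 sessions
by_email = completion_rate(sessions, "email_received")       # 2 of 4 sessions
print(f"confirmation page: {by_page:.0%}, confirmation email: {by_email:.0%}")
```

Neither number is wrong. They answer different questions, which is why the definition belongs in your study documentation.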

Time zone confusion

Time zone confusion is another surprisingly common source of human error. When your analytics dashboard uses Pacific time but your testing tool uses UTC, date ranges don’t align properly.

You end up comparing different time periods without realizing it, leading to discrepancies that seem inexplicable until you discover the underlying cause.
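One way to avoid this is to convert every date range to UTC before comparing tools. The sketch below is a simplified illustration: it assumes a fixed UTC-8 offset for Pacific time and uses a made-up date, so treat it as a starting point rather than a production recipe.

```python
from datetime import datetime, timedelta, timezone

# Simplifying assumption: a fixed UTC-8 offset stands in for Pacific time.
# Real pipelines should use a time zone database (for example Python's
# zoneinfo module) so daylight saving changes are handled correctly.
PACIFIC = timezone(timedelta(hours=-8), "PST")


def to_utc(local_dt):
    """Convert a timezone-aware datetime to UTC."""
    return local_dt.astimezone(timezone.utc)


# A single "March 1" in a Pacific-time dashboard covers this UTC window:
start = to_utc(datetime(2024, 3, 1, 0, 0, tzinfo=PACIFIC))
end = to_utc(datetime(2024, 3, 2, 0, 0, tzinfo=PACIFIC))
print(start.isoformat(), "to", end.isoformat())
```

The Pacific "day" runs from 08:00 UTC to 08:00 UTC the next day, so a tool reporting in UTC assigns a third of that day’s activity to a different calendar date.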

💡 Pro Tip

Always screenshot or note the timestamp and time zone when pulling data from any tool. Add a “Data collection details” section in your research templates that includes: date range, time zone, tool version, and any filters applied. Future you will thank present you when reconciling data across studies.

⚠️ Inconsistencies caused by UX research tools

Different tools measure the same thing in different ways, and this fundamental reality creates many data discrepancies.

Different measurement standards

Your analytics platform might end a session after 30 minutes of inactivity, while your heat mapping tool uses a 15-minute timeout. The same user behavior gets counted as one session in one tool and two sessions in another.
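Here is a minimal sketch of that effect. The `count_sessions` function and the event times are hypothetical, and real tools apply additional rules, but the timeout logic alone is enough to produce different counts.

```python
def count_sessions(timestamps_min, timeout_min):
    """Count sessions given event times (in minutes) and an inactivity timeout."""
    if not timestamps_min:
        return 0
    ordered = sorted(timestamps_min)
    sessions = 1
    for prev, curr in zip(ordered, ordered[1:]):
        if curr - prev > timeout_min:  # gap exceeds the timeout: new session
            sessions += 1
    return sessions


# Hypothetical user: active at minutes 0 and 5, then back at minute 25
# after a 20-minute pause.
events = [0, 5, 25, 27]
print(count_sessions(events, timeout_min=30))  # 1
print(count_sessions(events, timeout_min=15))  # 2
```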

Multiple tool problems

Having multiple tools in your research stack compounds this problem. Insights end up scattered across platforms, and you’re constantly jumping between them to piece together the full picture.

Each tool has its own way of handling data, its own definitions, and its own potential for technical glitches.

That’s why choosing the best UX research tools that integrate well is crucial for maintaining data consistency.

💡 Pro Tip

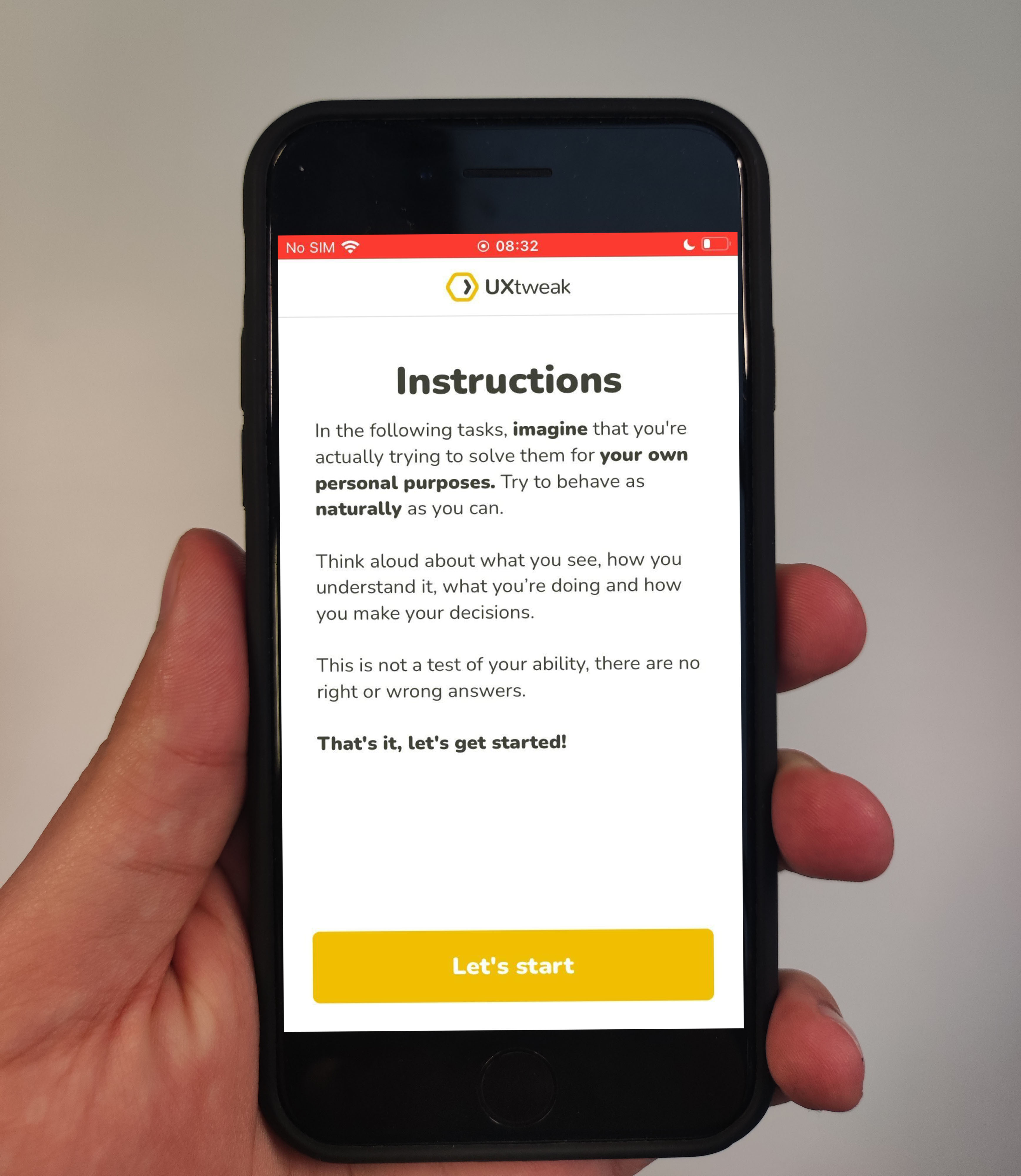

It’s better to opt for an all-in-one research tool like UXtweak that covers most of the analytics you might need in one platform. This way you lower the chance of data discrepancies and don’t have to jump between countless tools to collect insights.

Privacy and blocking issues

Third-party scripts and ad blockers introduce another layer of complexity. Your session recording tool might track 1,000 visitors, but privacy-conscious users with ad blockers prevent certain scripts from running.

Your analytics tool might only see 800 of those visitors, creating a 20% discrepancy that has nothing to do with actual user behavior.

No central repository

The lack of a centralized research repository means you’re manually combining data from multiple sources, each with its own export format and calculation method.

By the time you’ve normalized everything into a single report, opportunities for inconsistency have multiplied.

⚠️ Methodological errors

Sometimes the research design itself introduces discrepancies.

Moderation effects

Running an unmoderated usability test and a moderated one on the same feature often produces different results, not because of data measurement issues but because the methodologies fundamentally differ.

Participants behave differently when someone is watching versus when they’re alone. Different UX research methods naturally produce varying results based on their approach.

Sample bias

Sample bias creates discrepancies between studies. Your first study recruited participants through your customer database, skewing toward power users.

Your second study used a panel service, including more first-time users. The difference in findings isn’t about measurement error but about fundamentally different user populations.

Task design inconsistencies

Task design inconsistencies matter too. If one usability test gives participants specific scenarios while another uses open-ended exploration, you’re comparing apples to oranges.

The reported completion rates will differ not because of the interface but because of how you structured the research.

⚠️ Issues caused by poor integration

When your tools don’t talk to each other, data gets lost in translation.

Manual transfer problems

You export a CSV from your survey tool, manually import it into your analytics platform, and somewhere in that process, date formats change, responses get truncated, or records get duplicated.

API limitations

API limitations mean that even when tools theoretically integrate, they don’t always sync the data you need.

Your project management tool might integrate with your research repository, but key metadata fields don’t map properly. You end up with incomplete data sets that don’t tell the full story.

Version control chaos

Version control problems emerge when multiple team members are working with data simultaneously. One researcher is analyzing last week’s export while another is working with today’s data.

When you compare findings, discrepancies appear because you’re literally looking at different data sets, though neither of you realizes it.

How to find discrepancies in UX research data

Identifying data discrepancies requires systematic approaches that go beyond casual observation. Here are proven methods for uncovering inconsistencies before they undermine your research.

💡UX research triangulation

Triangulation in qualitative research involves collecting data through multiple methods to cross-validate your findings.

When you combine quantitative analytics, qualitative interviews, and behavioral observations, inconsistencies become visible.

The key is designing your research with triangulation in mind from the start.

Plan to collect the same insight through different channels. If you want to understand task completion, measure it through analytics, observe it in usability tests, and ask about it in surveys.

When all three align, you can be confident. When they don’t, you know where to dig deeper.

💡Cross-validation

Cross-validation means checking findings against multiple data sources.

📌 Example: If your primary analytics tool shows a 15% bounce rate increase, verify that against your secondary tool, your server logs, and your session recordings.

If they all confirm the trend, it’s real. If one contradicts the others, investigate why.

This approach works particularly well for catching tool-specific quirks. You might discover that one tool isn’t properly tracking single-page application navigation, or that another tool’s bot filtering is too aggressive.

These technical issues often explain discrepancies that initially seem mysterious.
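If you want to make this check repeatable, a short script can flag the source that disagrees with the rest. The sketch below compares each reading with the median across sources. The source names, the values, and the 5% tolerance are all assumptions for illustration, and it expects a nonzero median.

```python
from statistics import median


def flag_outliers(readings, tolerance=0.05):
    """Return sources whose value deviates from the median by more than
    `tolerance`, measured relative to the median."""
    mid = median(readings.values())
    return {
        source: value
        for source, value in readings.items()
        if abs(value - mid) / mid > tolerance
    }


# Hypothetical bounce rates for the same period from four sources.
bounce_rate = {
    "primary_analytics": 0.46,
    "secondary_analytics": 0.45,
    "server_logs": 0.44,
    "session_recordings": 0.31,
}
print(flag_outliers(bounce_rate))  # {'session_recordings': 0.31}
```

The median is used instead of the mean so that one badly off source doesn’t drag the reference value toward itself.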

💡 Pro Tip

When you discover a discrepancy, document it in a shared “known issues” log with the cause, affected metrics, and date range. Over time, this becomes an invaluable reference that helps your team quickly diagnose similar discrepancies instead of reinvestigating the same issues repeatedly.

💡Data auditing and visualization

Regular data audits involve systematically reviewing your data for anomalies. Look for unusual spikes or drops, unexpected patterns, or numbers that don’t make logical sense given what you know about your users.

A sudden 200% increase in mobile traffic deserves investigation, even if it’s technically possible.

Visualization makes discrepancies more obvious. When you plot multiple metrics on the same timeline, inconsistencies jump out.

If one line shows steady growth while another shows a sudden drop during the same period, you’ve identified a discrepancy that deserves attention.

💡Consistency checks

Implement routine consistency checks as part of your research process. Before finalizing any report, verify that your numbers add up. If you report 1,000 total sessions and break them down by device type, those segments should sum to 1,000.

If they don’t, you’ve found a discrepancy. Create simple validation rules for your data:

👉 Does the number of users who completed task A exceed the number who started it? That’s impossible and indicates a tracking error.

👉 Do percentages add up to more than 100%? Something’s wrong with how you’re calculating or how tools are tracking overlapping groups.
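The validation rules above can be sketched as a small function that runs before every report goes out. The report structure and field names here are hypothetical, so adapt them to however your team stores its numbers.

```python
def consistency_issues(report):
    """Return a list of human-readable problems found in a metrics report."""
    issues = []
    if sum(report["sessions_by_device"].values()) != report["total_sessions"]:
        issues.append("device segments do not sum to total sessions")
    if report["task_completed"] > report["task_started"]:
        issues.append("more users completed the task than started it")
    # Small tolerance so floating-point rounding is not reported as an error.
    if sum(report["answer_shares"].values()) > 1.0 + 1e-9:
        issues.append("answer shares exceed 100%")
    return issues


# Hypothetical report with two deliberate problems.
report = {
    "total_sessions": 1000,
    "sessions_by_device": {"desktop": 600, "mobile": 350, "tablet": 40},
    "task_started": 420,
    "task_completed": 450,
    "answer_shares": {"yes": 0.55, "no": 0.45},
}
for issue in consistency_issues(report):
    print("WARNING:", issue)
```

For this sample report the function flags the device breakdown (990 instead of 1,000) and the impossible completion count, while the answer shares pass.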

How to resolve data discrepancy issues

Finding discrepancies is only half the battle. Here’s how to address and prevent them systematically.

📍Standardize data collection

Create clear, documented standards for how your team collects and measures data. Define what constitutes a session, how you measure task completion, and what counts as an error.

When everyone uses the same definitions, internal discrepancies decrease dramatically.

Develop templates and protocols for common research activities. Your usability testing template should specify exactly how to configure recording settings, what metrics to track, and how to code observations.

This consistency ensures that studies conducted by different researchers produce comparable results.

📍Document study protocols and metrics

As W. Edwards Deming wisely noted,

Without data, you’re just another person with an opinion.

But we’d add that without documentation, data becomes opinion too.

Document not just what you measured but how you measured it, including tool versions, settings, and calculation methods. Tracking the right UX metrics for measuring user experience starts with clear documentation.

Your research documentation should be detailed enough that someone else could replicate your study exactly.

Include screenshots of tool configurations, copies of recruitment screeners, and step-by-step analysis procedures. This level of detail seems excessive until a discrepancy appears and you need to trace exactly what happened.

📍Invest in a versatile UX research tool

The most effective way to prevent tool-related discrepancies is consolidating your research stack. When all your data lives in one platform, you eliminate the inconsistencies that emerge from moving data between systems.

UXtweak offers an all-in-one research platform that keeps all your user insights in one place.

Data isn’t lost when transferring to other tools. Metrics and their calculations remain consistent, making results comparable across studies without manual adjustments.

When you conduct website testing, mobile testing, surveys, and card sorting in the same platform, you’re working with data that’s measured and calculated consistently.

📍Automate where possible

Manual data handling introduces errors. Wherever possible, automate data collection, transfer, and calculation.

Set up automated exports from your research tools to your analysis platform. Use scripts to standardize data formatting rather than doing it manually.

Let your tools calculate metrics rather than doing it in spreadsheets where formula errors can creep in.

Automation also creates consistency over time. The same script will process data the same way every time, while manual processes vary slightly each time a human performs them.

Those small variations accumulate into significant discrepancies.
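As an illustration, a formatting script of this kind might normalize dates and drop duplicated records from a survey export. Everything in the sketch below is assumed for the example: the column names, the sample rows, and the two date formats.

```python
import csv
import io
from datetime import datetime

# Hypothetical export with mixed date formats and a duplicated record.
raw_csv = """response_id,submitted,answer
r1,2024-03-01,Yes
r2,03/02/2024,No
r2,03/02/2024,No
"""

DATE_FORMATS = ("%Y-%m-%d", "%m/%d/%Y")  # assumed formats; adjust to your tools


def parse_date(text):
    """Try each known format and return an ISO 8601 date string."""
    for fmt in DATE_FORMATS:
        try:
            return datetime.strptime(text, fmt).date().isoformat()
        except ValueError:
            continue
    raise ValueError(f"Unrecognized date format: {text!r}")


def clean_rows(csv_text):
    """Normalize dates and drop rows whose response_id was already seen."""
    seen, cleaned = set(), []
    for row in csv.DictReader(io.StringIO(csv_text)):
        if row["response_id"] in seen:
            continue
        seen.add(row["response_id"])
        row["submitted"] = parse_date(row["submitted"])
        cleaned.append(row)
    return cleaned


rows = clean_rows(raw_csv)
print(rows)
```

Note that a value like 03/02/2024 is ambiguous between month-first and day-first conventions. The script can only apply the format you tell it to, so confirm each tool’s export format before trusting the output.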

📍Improve collaboration between teams

As Lilibeth Bustos Linares emphasized in our Women in UX podcast on collaboration in product teams,

Communication is essential for aligning team members towards a common goal. It involves active listening, open idea sharing, and constructive feedback.

This applies directly to preventing data discrepancies.

Regular sync meetings between researchers, analysts, and product teams help catch discrepancies early.

When the analytics team reports one thing and the research team reports another, collaborative discussion can uncover the root cause quickly.

Maybe they’re measuring different segments, or one team’s data is delayed, or definitions differ subtly.

💡 Pro Tip

Create shared documentation that all teams reference. When product, engineering, and research all use the same metrics dashboard with agreed-upon definitions, discrepancies become much less common. Everyone is literally looking at the same data, calculated the same way.

How UXtweak prevents data discrepancy in your research

UXtweak was built specifically to address the fragmentation and inconsistency that plague UX research. By providing a comprehensive platform for all your research needs, it eliminates the primary causes of data discrepancy.

✅ Comprehensive platform

The platform consolidates 11+ UX research tools in one place. This means you’re not juggling multiple tools with different tracking methods, calculation formulas, and data export formats.

✅ Consistent methodology

All research methods within UXtweak use consistent participant tracking and metric calculations.

When you measure task success in a usability test and in a prototype test, the same logic determines what counts as success. This consistency makes it meaningful to compare results across different studies and research methods.

✅ Unified analytics

The platform’s integrated analytics ensure that all your metrics come from the same data source.

Everything is measured, stored, and calculated within UXtweak’s unified system, eliminating the discrepancies that emerge when combining data from multiple sources.

🔽 Ready to see it in action? Try UXtweak’s website and mobile usability testing tools!

Wrapping up

Data discrepancies represent one of the most insidious threats to reliable UX research. They undermine stakeholder confidence, lead to poor product decisions, and waste countless hours of your team’s time. But they’re not inevitable.

By understanding the root causes of discrepancies, whether human error, tool inconsistencies, methodological issues, or poor integration, you can implement systematic approaches to prevent them.

Standardizing your processes, documenting your methods, consolidating your tools, and fostering collaboration all contribute to more reliable, consistent research data.

UXtweak is here to help you consolidate your research findings and stop jumping between multiple platforms.

Try it for free today and see for yourself! 🐝

FAQ

How do you check for data discrepancies?

To check for data discrepancies, compare the same metrics across different data sources and look for inconsistencies. Use triangulation by collecting data through multiple methods (analytics, testing, surveys) and verify that findings align.

Create visualization dashboards that plot related metrics together, making anomalies easier to spot. Regularly audit your data for unusual patterns, sudden spikes or drops, and numbers that don’t align with user behavior or simply don’t add up.

What is an acceptable data discrepancy?

Small discrepancies (typically under 5%) are often acceptable and may result from different measurement methodologies, sampling variations, or timing differences between tools. However, larger discrepancies require investigation.

The acceptable threshold depends on your research context: discrepancies in critical metrics like conversion rates demand stricter standards than those in purely informational metrics. Focus less on eliminating every minor variation and more on ensuring that discrepancies don’t change the fundamental insights or decisions emerging from your research.
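To apply a threshold consistently, it helps to fix how the discrepancy is calculated. The sketch below is one possible convention, not a standard: it measures the difference relative to the mean of the two readings and uses 5% as the default cutoff. Both choices are assumptions you should adjust and document.

```python
def relative_discrepancy(a, b):
    """Relative difference between two readings, measured against their mean."""
    mean = (a + b) / 2
    return abs(a - b) / mean if mean else 0.0


def needs_investigation(a, b, threshold=0.05):
    """True when two readings differ by more than the chosen threshold."""
    return relative_discrepancy(a, b) > threshold


# Readings from the opening example: 78% vs. 62% task completion.
print(f"{relative_discrepancy(0.78, 0.62):.1%}")  # 22.9%
print(needs_investigation(0.78, 0.62))  # True
print(needs_investigation(0.71, 0.70))  # False
```

Keep in mind that a relative difference and a difference in percentage points are not the same thing. The gap between 78% and 62% is 16 percentage points but roughly 23% in relative terms, so state which one your threshold refers to.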

Is a discrepancy an error?

Not always. A discrepancy simply means different data sources show different values for what should be the same measurement. Sometimes discrepancies indicate errors in tracking, calculation, or methodology that need correction. Other times they reflect legitimate differences in how tools measure or define metrics.

The key is investigating each discrepancy to understand its cause. Some discrepancies reveal insights about your measurement approach or user behavior, while others indicate problems that need fixing to ensure reliable research.

📌 Example: If analytics show high engagement but interviews reveal frustration, you’ve found a discrepancy worth investigating.